Stream Processing: How Real-Time Data Processing is Transforming Technology (Part 2)

Unveiling the power of real-time data through stream processing made simple.

In today's digital age, the ability to process and analyze data in real time is more crucial than ever. From social media feeds to financial transactions, businesses need to handle continuous streams of information efficiently and accurately. This post explores the concepts, challenges, and applications of stream processing in a way that's accessible to everyone.

Understanding State, Streams, and Immutability

At the core of stream processing lies the idea of state—the current status of a system based on past events. Think of state as the end result of a series of actions. For instance, your bank account balance is the cumulative effect of all deposits and withdrawals you've made over time.

The Power of Immutable Events

Immutability means once something is created, it cannot be changed. In data processing, this translates to events being unalterable records. This concept isn't new; accountants have used immutable ledgers for centuries. Instead of erasing errors, they add correcting entries, ensuring a complete and transparent history.

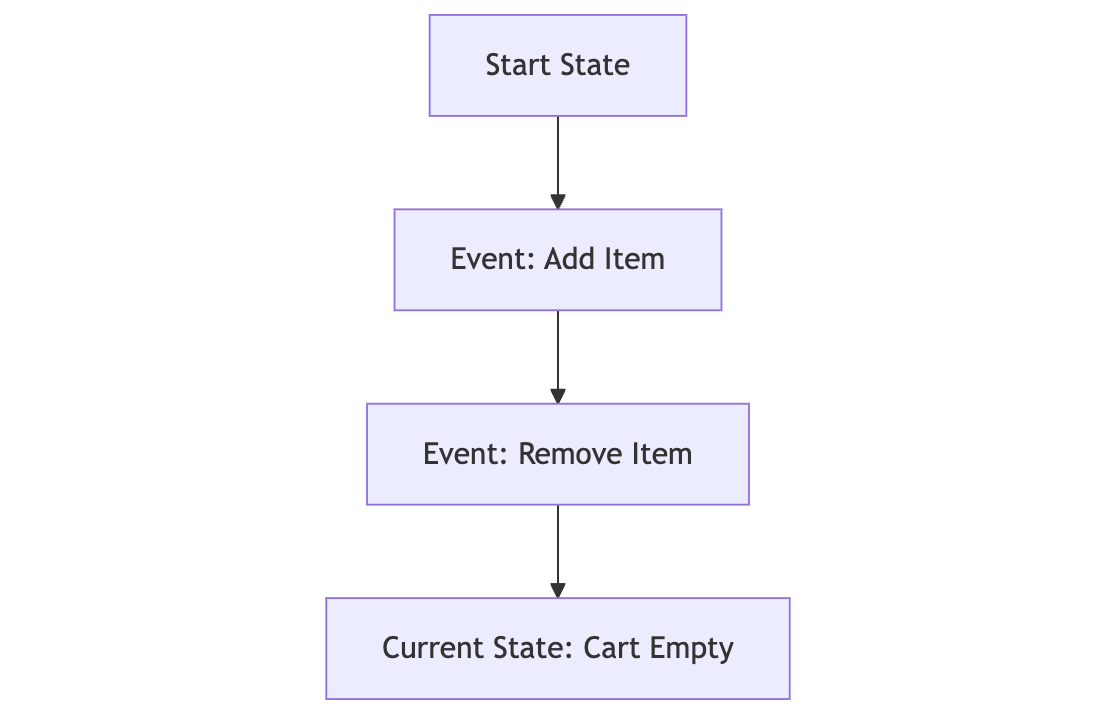

Example: Imagine an online shopping cart. If a customer adds an item and then removes it, both actions are recorded. Even though the cart is empty, the system knows what items were considered. This information is invaluable for understanding customer behavior and improving recommendations.
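To make the idea concrete, here is a minimal Python sketch, assuming cart events are simple `(action, item)` tuples. The names `events`, `cart_state`, and `items_considered` are hypothetical. The current cart is derived by replaying the immutable log, while the log itself still remembers every item the customer looked at.

```python
from collections import Counter

# Hypothetical immutable event log: events are only ever appended, never edited.
events = (
    ("add", "book"),
    ("add", "lamp"),
    ("remove", "lamp"),
)

def cart_state(log):
    """Derive the current cart contents by replaying every event in order."""
    cart = Counter()
    for action, item in log:
        if action == "add":
            cart[item] += 1
        elif action == "remove" and cart[item] > 0:
            cart[item] -= 1
    # Drop items whose count has fallen back to zero.
    return {item: n for item, n in cart.items() if n > 0}

def items_considered(log):
    """Every item ever added, even if it was later removed."""
    return {item for action, item in log if action == "add"}

print(cart_state(events))                # {'book': 1}
print(sorted(items_considered(events)))  # ['book', 'lamp']
```

The cart (the state) is just a function of the log; nothing is lost when the lamp is removed.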

Simplifying Concurrency with Event Logs

Handling multiple events happening at the same time (concurrency) can be tricky. Immutable events simplify this. Since each event is a self-contained record of one action, recording it takes a single write in one place, namely appending it to the log, which is easy to make atomic. This avoids coordinating several in-place updates across different parts of a system.
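The sketch below illustrates the point with a hypothetical in-memory `AppendOnlyLog` class; it is not any particular product's API. Several threads record events concurrently, and the only coordination needed is serializing appends (here with a `threading.Lock`), because no writer ever modifies an existing record.

```python
import threading

class AppendOnlyLog:
    """A minimal in-memory event log: records can be appended and read, never modified."""

    def __init__(self):
        self._records = []
        self._lock = threading.Lock()

    def append(self, record):
        # The only write operation: append under a lock and return the record's offset.
        with self._lock:
            self._records.append(record)
            return len(self._records) - 1

    def read(self, offset=0):
        # Readers get a copy, so they never observe a half-applied change.
        with self._lock:
            return list(self._records[offset:])

log = AppendOnlyLog()

def writer(name, n):
    # Each writer records n events of its own.
    for i in range(n):
        log.append((name, i))

threads = [threading.Thread(target=writer, args=(f"w{k}", 100)) for k in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(len(log.read()))  # 400
```

Events from different writers interleave in the log, but each writer's own events keep their order, and the log's order settles which event came first.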

Processing Streams: Turning Data into Action

Once we have a stream of events, what can we do with it? Generally, there are three paths:

Data Storage: Feed the events into a database or search index for querying.

User Notifications: Send real-time updates to users, like alerts or dashboard visuals.

Stream Processing: Analyze and transform streams to create new streams.

We'll focus on the third option—processing streams to generate valuable insights.
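A stream processor can be thought of as a function from one stream to another. Here is a minimal sketch using Python generators, assuming hypothetical page-view events shaped as `(user_id, page, duration_seconds)`; real frameworks distribute operators like these across many machines.

```python
from collections.abc import Iterable, Iterator

# Hypothetical input event shape: (user_id, page, duration_seconds)
PageView = tuple[str, str, float]

def long_views(events: Iterable[PageView], min_seconds: float) -> Iterator[PageView]:
    """Operator 1 (filter): keep only views that lasted at least min_seconds."""
    for event in events:
        if event[2] >= min_seconds:
            yield event

def to_engagement(events: Iterable[PageView]) -> Iterator[dict]:
    """Operator 2 (map): reshape each view into a derived 'engagement' event."""
    for user, page, seconds in events:
        yield {"user": user, "page": page, "minutes": round(seconds / 60, 2)}

source = [("u1", "/home", 3.0), ("u2", "/pricing", 45.0), ("u1", "/docs", 120.0)]

# Chaining operators: the output stream of one is the input stream of the next.
for event in to_engagement(long_views(source, min_seconds=10)):
    print(event)
```

Because generators are lazy, each event flows through the whole chain as soon as it arrives, rather than waiting for the input to end.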

Applications of Stream Processing

Complex Event Processing (CEP)

CEP involves detecting patterns within streams of events, much as a regular expression finds patterns in text. You describe the sequence of events you are looking for, and the CEP system emits a "complex event" whenever the stream contains a match.

Example: A security system might monitor login attempts. If multiple failed logins occur from different locations within a short time, it could indicate a hacking attempt.
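Real CEP engines usually let you declare such patterns in a query language; the hand-written Python sketch below just shows the underlying idea. The 60-second window, the threshold of three distinct locations, and the event tuple shape are all assumptions for illustration.

```python
from collections import defaultdict, deque

WINDOW_SECONDS = 60   # assumed meaning of "a short time"
MIN_LOCATIONS = 3     # assumed number of distinct locations that triggers an alert

def detect_suspicious_logins(events):
    """Yield (user, timestamp) when a user has failed logins from at least
    MIN_LOCATIONS distinct locations within WINDOW_SECONDS.

    `events` is an iterable of (timestamp, user, location, success) tuples,
    assumed to arrive in timestamp order.
    """
    recent_failures = defaultdict(deque)  # user -> deque of (timestamp, location)
    for ts, user, location, success in events:
        if success:
            continue
        window = recent_failures[user]
        window.append((ts, location))
        # Forget failures that have fallen out of the time window.
        while window and window[0][0] < ts - WINDOW_SECONDS:
            window.popleft()
        if len({loc for _, loc in window}) >= MIN_LOCATIONS:
            yield (user, ts)
            window.clear()  # avoid alerting again on the same failures

logins = [
    (0, "alice", "Berlin", False),
    (10, "alice", "Lagos", False),
    (15, "bob", "Paris", True),
    (20, "alice", "Lima", False),
    (200, "alice", "Berlin", False),
]
print(list(detect_suspicious_logins(logins)))  # [('alice', 20)]
```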

Stream Analytics

Stream analytics computes aggregate statistics over a period of time, such as counts, averages, or rates, rather than searching for particular sequences of events.

Example: A video streaming service might track the number of viewers every minute to detect spikes in popularity or outages.
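A minimal sketch of that example, assuming viewer events arrive as `(timestamp_seconds, viewer_id)` pairs and that a doubling or halving from one minute to the next is worth flagging (both the event shape and the `factor` threshold are hypothetical):

```python
def viewers_per_minute(events):
    """Count distinct viewers in each one-minute bucket.

    Returns {minute_start_seconds: distinct_viewer_count}, ordered by minute.
    """
    viewers = {}
    for ts, viewer in events:
        minute = int(ts // 60) * 60
        viewers.setdefault(minute, set()).add(viewer)
    return {minute: len(ids) for minute, ids in sorted(viewers.items())}

def flag_anomalies(counts, factor=2.0):
    """Flag minutes whose count rose (spike) or fell (possible outage) by `factor`
    compared with the previous minute that had any events."""
    flagged = []
    minutes = sorted(counts)
    for prev, cur in zip(minutes, minutes[1:]):
        before, now = counts[prev], counts[cur]
        if now >= before * factor:
            flagged.append((cur, "spike"))
        elif now * factor <= before:
            flagged.append((cur, "drop"))
    return flagged

views = [(5, "a"), (20, "b"), (70, "a"), (75, "b"), (80, "c"), (85, "d"), (130, "a")]
counts = viewers_per_minute(views)
print(counts)                  # {0: 2, 60: 4, 120: 1}
print(flag_anomalies(counts))  # [(60, 'spike'), (120, 'drop')]
```

One limitation worth noting: a minute with no events at all never appears in `counts`, so a complete outage detector would also have to notice those gaps.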

Searching Within Streams

Sometimes, we need to find events that match certain criteria as they happen.

Example: Real estate apps notify users instantly when a new listing matches their saved search preferences.
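This reverses the usual database pattern: the queries are stored, and each incoming event is run against them. The sketch below assumes a hypothetical `SavedSearch` type and listing dictionaries. It checks every query against every listing, which is fine for illustration; production systems index the queries instead (Elasticsearch's percolator feature is one example).

```python
from dataclasses import dataclass

@dataclass
class SavedSearch:
    """Hypothetical saved search: the stored query."""
    user: str
    city: str
    max_price: int
    min_bedrooms: int

    def matches(self, listing: dict) -> bool:
        return (listing["city"] == self.city
                and listing["price"] <= self.max_price
                and listing["bedrooms"] >= self.min_bedrooms)

saved_searches = [
    SavedSearch("ana", "Lisbon", 400_000, 2),
    SavedSearch("ben", "Porto", 250_000, 1),
]

def notifications(listing_stream, searches):
    """For each new listing, yield (user, listing_id) for every saved search it matches."""
    for listing in listing_stream:
        for search in searches:
            if search.matches(listing):
                yield (search.user, listing["id"])

new_listings = [
    {"id": 1, "city": "Lisbon", "price": 350_000, "bedrooms": 3},
    {"id": 2, "city": "Porto", "price": 300_000, "bedrooms": 2},
    {"id": 3, "city": "Porto", "price": 200_000, "bedrooms": 1},
]
print(list(notifications(new_listings, saved_searches)))  # [('ana', 1), ('ben', 3)]
```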

Message Passing and RPC

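Remote Procedure Calls (RPC) allow programs to execute code across a network. Message-passing and RPC systems are not usually considered stream processors, but the two overlap. Some stream processors interleave user queries with the event stream: Apache Storm's distributed RPC feature, for example, farms queries out to the same nodes that process events, so the answers reflect the latest state.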
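To show what "interleaving queries with events" means, here is a single-process Python sketch. It is not Storm's API: the message format and `run_processor` function are hypothetical. Events and queries share one inbox, so each query is answered from the state as it stands at that point in the stream.

```python
import queue

def run_processor(inbox):
    """Process one inbox that interleaves events and queries.

    Messages are ("event", key, amount) or ("query", key, reply_queue);
    None is a sentinel that stops processing. Returns the final totals.
    """
    totals = {}
    while True:
        message = inbox.get()
        if message is None:
            return totals
        kind = message[0]
        if kind == "event":
            _, key, amount = message
            totals[key] = totals.get(key, 0) + amount
        elif kind == "query":
            _, key, reply = message
            reply.put(totals.get(key, 0))  # answer from current state

inbox = queue.Queue()
answer = queue.Queue()
inbox.put(("event", "page:/home", 1))
inbox.put(("event", "page:/home", 1))
inbox.put(("query", "page:/home", answer))
inbox.put(("event", "page:/home", 1))
inbox.put(None)

final_totals = run_processor(inbox)
seen = answer.get()
print(seen)          # 2: the query only saw the events ahead of it
print(final_totals)  # {'page:/home': 3}
```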

Navigating the Challenges of Time in Streams

Time plays a crucial role in stream processing, but it's not always straightforward.

When Is a Window Complete?

In stream analytics, we often compute metrics over time windows (e.g., "the last 5 minutes"). However, events might arrive late due to network delays or processing lags. Determining when all relevant events have been received for a window is challenging.

Strategies:

Ignoring Late Events: Accept that some events might be missed.

Updating Past Results: Adjust calculations if late events arrive.
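Both strategies can be sketched in a few lines of Python. The sketch assumes 60-second windows and a deliberately simple completeness rule: a window counts as finished once an event for a later window arrives. Real systems typically use a timeout or watermark instead.

```python
WINDOW = 60  # window length in seconds (assumed)

def count_with_late_events(events, policy):
    """Count events per 60-second window and publish each window's count.

    `events` are event-time timestamps in arrival order.
    policy="ignore": drop stragglers whose window was already published.
    policy="update": publish a corrected count for that window instead.
    Returns the published (window_start, count) results, corrections included.
    """
    counts = {}
    published = []
    current = None  # start of the newest window seen so far
    for ts in events:
        window = int(ts // WINDOW) * WINDOW
        if current is not None and window < current:
            # Straggler: its window is already considered complete.
            if policy == "update":
                counts[window] = counts.get(window, 0) + 1
                published.append((window, counts[window]))
            continue
        if current is not None and window > current:
            published.append((current, counts[current]))  # close the previous window
        current = window
        counts[window] = counts.get(window, 0) + 1
    if current is not None:
        published.append((current, counts[current]))
    return published

arrivals = [5, 30, 65, 50, 70, 125]  # the event at t=50 arrives late
print(count_with_late_events(arrivals, "ignore"))  # [(0, 2), (60, 2), (120, 1)]
print(count_with_late_events(arrivals, "update"))  # [(0, 2), (0, 3), (60, 2), (120, 1)]
```

With `"update"`, downstream consumers must be prepared to receive a second, corrected result for a window they have already seen.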

Whose Clock Is Correct?

Events can have timestamps from different sources, leading to inconsistencies.

Example: A mobile app records when a user performs an action, but if the phone's clock is wrong, the timestamp is inaccurate.

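Solution: Record multiple timestamps:

Event Time: When the action occurred, according to the device's clock.

Send Time: When the device sent the event, according to the device's clock.

Receive Time: When the server received the event, according to the server's clock.

Subtracting the send time from the receive time estimates the offset between the device's clock and the server's clock (assuming network delay is small). Applying that offset to the event time gives a better estimate of when the event actually happened.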
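The correction is simple arithmetic. Here is a minimal Python sketch using `datetime`; the function name and the example timestamps are hypothetical.

```python
from datetime import datetime

def corrected_event_time(event_time: datetime, send_time: datetime,
                         receive_time: datetime) -> datetime:
    """Estimate when an event really happened, in server-clock terms.

    event_time and send_time come from the device's (possibly wrong) clock;
    receive_time comes from the server's clock. Network delay is ignored, so
    (receive_time - send_time) approximates the device clock's offset.
    """
    clock_offset = receive_time - send_time
    return event_time + clock_offset

# Hypothetical example: the phone's clock runs about 2 hours behind the server's.
event = datetime(2024, 5, 1, 8, 0, 0)       # device clock: action performed offline
sent = datetime(2024, 5, 1, 8, 30, 0)       # device clock: event sent after reconnecting
received = datetime(2024, 5, 1, 10, 30, 5)  # server clock: event received

print(corrected_event_time(event, sent, received))  # 2024-05-01 10:00:05
```

The extra 5 seconds in the result are network delay that the estimate cannot separate from the clock offset.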

Types of Windows in Stream Processing

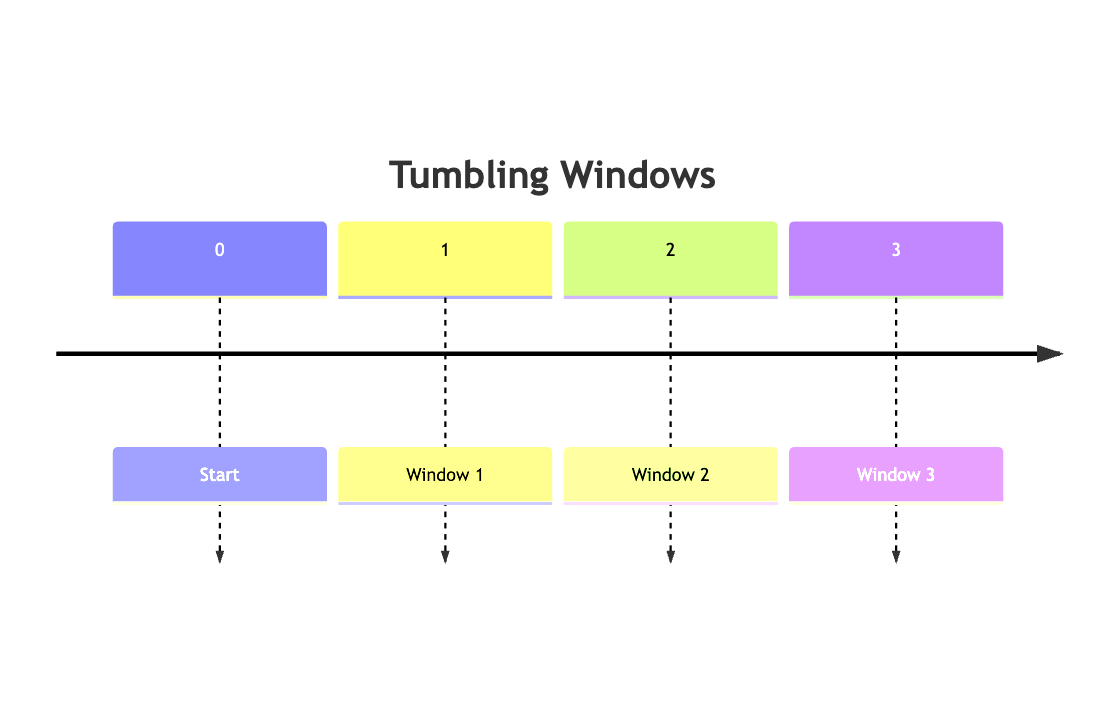

1. Tumbling Windows

Fixed, non-overlapping intervals.

Example: Counting website hits every minute.

2. Hopping Windows

Fixed intervals that can overlap, providing a smoother view.

Example: A 5-minute window updated every minute.

3. Sliding Windows

Windows that move with each event, capturing the last 'N' minutes.

4. Session Windows

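Windows with no fixed duration: events from the same user that occur close together are grouped into a session, which ends once the user has been inactive for a while (for example, 30 minutes).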
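The four window types differ mainly in how an event's timestamp maps to windows. The Python sketch below assumes integer timestamps in seconds; the function names are hypothetical, and frameworks such as Apache Flink offer these window types through their own APIs.

```python
from collections import deque

def tumbling_window(ts, size):
    """The single [start, end) window of length `size` containing ts."""
    start = ts - (ts % size)
    return (start, start + size)

def hopping_windows(ts, size, hop):
    """Every [start, end) window of length `size`, starting every `hop` seconds,
    that contains ts. Assumes size is a multiple of hop and ignores windows before t=0."""
    last_start = ts - (ts % hop)
    first_start = last_start - size + hop
    return [(s, s + size) for s in range(first_start, last_start + 1, hop) if s >= 0]

def sliding_counts(timestamps, size):
    """For each event, count the events in the `size` seconds ending at it: (ts - size, ts]."""
    window = deque()
    counts = []
    for ts in sorted(timestamps):
        window.append(ts)
        while window[0] <= ts - size:
            window.popleft()
        counts.append((ts, len(window)))
    return counts

def session_windows(timestamps, gap):
    """Group one user's timestamps into sessions; a gap longer than `gap` seconds ends a session."""
    sessions = []
    for ts in sorted(timestamps):
        if sessions and ts - sessions[-1][-1] <= gap:
            sessions[-1].append(ts)
        else:
            sessions.append([ts])
    return [(s[0], s[-1]) for s in sessions]

print(tumbling_window(125, 60))                             # (120, 180)
print(len(hopping_windows(420, 300, 60)))                   # 5: a 5-minute window every minute
print(sliding_counts([0, 100, 250, 320], 300))              # [(0, 1), (100, 2), (250, 3), (320, 3)]
print(session_windows([0, 100, 200, 5000, 5100], 1800))    # [(0, 200), (5000, 5100)]
```

Note that with hopping windows each event belongs to several windows at once (here five), which is what gives the smoother view.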

Combining Streams: The Art of Stream Joins

Joining data streams allows us to enrich and correlate information.

Stream-Stream Joins

Combining two streams based on a common key within a time window.

Example: Matching user search queries with the results they subsequently click, to measure the click-through rate of search results.
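A minimal sketch of such a join in Python, assuming both streams are merged into one time-ordered sequence of `(timestamp, kind, search_id, payload)` tuples and that a click counts only within one hour of its search. Both assumptions are for illustration. The processor keeps recent searches in memory, emits a joined record for each matching click, and reports searches that expire without a click.

```python
JOIN_WINDOW = 3600  # assumed: a click must follow its search within 1 hour

def join_searches_and_clicks(events):
    """Join merged search and click events on search_id within JOIN_WINDOW.

    Yields ("clicked", search_id, query, url) for matched pairs and
    ("no_click", search_id, query, None) once a search expires unclicked.
    """
    pending = {}  # search_id -> [timestamp, query, clicked?]
    for ts, kind, search_id, payload in events:
        # Expire searches whose join window closed before this event.
        expired = [s for s, (s_ts, _, _) in pending.items() if ts - s_ts > JOIN_WINDOW]
        for sid in expired:
            _, query, clicked = pending.pop(sid)
            if not clicked:
                yield ("no_click", sid, query, None)
        if kind == "search":
            pending[search_id] = [ts, payload, False]
        elif kind == "click" and search_id in pending:
            pending[search_id][2] = True
            yield ("clicked", search_id, pending[search_id][1], payload)

events = [
    (0, "search", "s1", "cheap flights"),
    (10, "search", "s2", "hotels rome"),
    (120, "click", "s1", "https://example.com/flights"),
    (4000, "search", "s3", "car rental"),
]
joined = list(join_searches_and_clicks(events))
for record in joined:
    print(record)
```

Counting `"clicked"` versus `"no_click"` records gives the click-through rate. Searches still pending when the stream is paused (like `s3` here) have not been decided yet.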

Stream-Table Joins

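Enriching a stream by joining it with a table, such as a database of user profiles. Rather than querying the database for every event, the stream processor can keep a local copy of the table and keep it current by subscribing to the table's changelog (change data capture).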

Example: Adding user profile information to activity logs.
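Here is a minimal Python sketch of a stream-table join, assuming a hypothetical message format in which profile changes (from change data capture) and activity events arrive interleaved on one input. The processor applies table changes to a local dictionary and enriches each activity event with the profile as it stands at that moment.

```python
def enrich_activity(messages):
    """Join an activity stream with a profile table kept current from its changelog.

    `messages` interleaves two inputs in arrival order:
      ("profile", user_id, profile_dict)  - a change to the profile table
      ("activity", user_id, action)       - an activity event to enrich
    """
    profiles = {}  # local copy of the profile table
    for source, user_id, value in messages:
        if source == "profile":
            profiles[user_id] = value  # apply the table change
        else:
            profile = profiles.get(user_id, {})  # profile as of this moment
            yield {**profile, "user": user_id, "action": value}

messages = [
    ("profile", "u1", {"country": "DE"}),
    ("activity", "u1", "login"),
    ("profile", "u1", {"country": "FR"}),
    ("activity", "u1", "purchase"),
    ("activity", "u2", "login"),
]
enriched = list(enrich_activity(messages))
for event in enriched:
    print(event)
```

The two `u1` events are enriched with different countries, so the result depends on the order in which profile changes and activity events are processed.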

Table-Table Joins

Both inputs are database changelogs. The stream processor maintains the result of joining the two tables as a materialized view and updates that view whenever either table changes.

Example: Updating a user's social media feed when their friends post new content.
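A minimal sketch of the feed example, assuming one merged changelog of post and follow changes and globally unique post IDs (both assumptions are hypothetical). Each user's timeline is the materialized result of joining the posts table with the follows table, and it is updated in place as either table changes.

```python
from collections import defaultdict

def maintain_timelines(changes):
    """Maintain each user's timeline as a materialized join of posts and follows.

    `changes` is one merged changelog of:
      ("post", author, post_id) / ("delete_post", author, post_id)
      ("follow", follower, followee) / ("unfollow", follower, followee)
    Returns {user: set of post_ids in their timeline}.
    """
    posts_by_author = defaultdict(set)  # the posts table
    followers = defaultdict(set)        # the follows table, indexed by followee
    timelines = defaultdict(set)        # the materialized join result

    for op, a, b in changes:
        if op == "post":                # fan the new post out to followers
            posts_by_author[a].add(b)
            for f in followers[a]:
                timelines[f].add(b)
        elif op == "delete_post":       # remove it from followers' timelines
            posts_by_author[a].discard(b)
            for f in followers[a]:
                timelines[f].discard(b)
        elif op == "follow":            # back-fill the followee's existing posts
            followers[b].add(a)
            timelines[a] |= posts_by_author[b]
        elif op == "unfollow":          # drop the followee's posts
            followers[b].discard(a)
            timelines[a] -= posts_by_author[b]
    return dict(timelines)

changes = [
    ("post", "bob", "p1"),
    ("follow", "ana", "bob"),
    ("post", "bob", "p2"),
    ("follow", "ana", "cat"),
    ("post", "cat", "p3"),
    ("delete_post", "bob", "p1"),
    ("unfollow", "ana", "cat"),
]
print(maintain_timelines(changes)["ana"])  # {'p2'}
```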

Ensuring Fault Tolerance in Stream Processing

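A batch job that fails can simply be rerun, but a stream never ends, so that option is not available. The goal is for each input event to affect the output exactly once, without data loss or duplication, even when processing fails partway through.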

Microbatching and Checkpointing

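Microbatching breaks the stream into small blocks (Spark Streaming uses batches of around one second) and treats each block like a miniature batch job. Checkpointing, used by Apache Flink, periodically writes a snapshot of the processor's state to durable storage. Either way, if a failure occurs, the system restarts from the last completed batch or checkpoint and discards any output produced since then. This gives exactly-once semantics inside the framework, but output that has already left it, such as a sent email, cannot be taken back.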
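The toy Python sketch below combines both ideas for brevity: it counts words in micro-batches of three and checkpoints the input offset and state after each batch. The checkpoint file format, the function names, and the `crash_at` hook that simulates a failure are all hypothetical; Spark and Flink work quite differently internally.

```python
import json
import os
import tempfile

def save_checkpoint(path, offset, state):
    """Durably record the input offset reached and the state at that point.
    Writing a temp file and renaming it means a crash never leaves a half-written checkpoint."""
    fd, tmp = tempfile.mkstemp(dir=os.path.dirname(path) or ".")
    with os.fdopen(fd, "w") as f:
        json.dump({"offset": offset, "state": state}, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp, path)

def load_checkpoint(path):
    """Return (offset, state) from the last checkpoint, or a fresh start if none exists."""
    if not os.path.exists(path):
        return 0, {}
    with open(path) as f:
        data = json.load(f)
    return data["offset"], data["state"]

def run(stream, path, batch_size=3, crash_at=None):
    """Count words in `stream` (a list) in micro-batches, checkpointing after each one.
    `crash_at` simulates a failure when processing reaches that input offset."""
    offset, counts = load_checkpoint(path)
    while offset < len(stream):
        batch = stream[offset:offset + batch_size]
        for i, word in enumerate(batch):
            if crash_at is not None and offset + i == crash_at:
                raise RuntimeError("simulated crash mid-batch")
            counts[word] = counts.get(word, 0) + 1
        offset += len(batch)
        save_checkpoint(path, offset, counts)
    return counts

stream = ["a", "b", "a", "c", "a", "b", "c"]
path = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
try:
    run(stream, path, crash_at=4)  # dies partway through the second batch
except RuntimeError:
    pass  # the half-finished batch's in-memory updates are lost

print(run(stream, path))  # {'a': 3, 'b': 2, 'c': 2}
```

After the restart, the second batch is reprocessed from the checkpoint, so the word `c` it had already counted before the crash is not counted twice.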

Atomic Operations

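To prevent partial updates, systems use atomic operations: all the outputs and side effects of processing an event (messages sent downstream, state changes, and the record that the input has been consumed) take effect together, or none of them do.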
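Within a single database, this principle can be shown with one transaction. In the sketch below, an in-memory SQLite database stands in for a processor's state store; the schema and the `process` function are hypothetical. Each event's state change and its input offset are committed together, so a crash between the two writes leaves neither behind.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE counts (page TEXT PRIMARY KEY, n INTEGER NOT NULL);
    CREATE TABLE progress (id INTEGER PRIMARY KEY, next_offset INTEGER NOT NULL);
    INSERT INTO progress VALUES (1, 0);
""")

def process(offset, page, fail_midway=False):
    """Apply one event's state change and record its offset in a single transaction."""
    with conn:  # commits if the block succeeds, rolls back if an exception escapes
        conn.execute("INSERT OR IGNORE INTO counts VALUES (?, 0)", (page,))
        conn.execute("UPDATE counts SET n = n + 1 WHERE page = ?", (page,))
        if fail_midway:
            raise RuntimeError("simulated crash between the two writes")
        conn.execute("UPDATE progress SET next_offset = ? WHERE id = 1", (offset + 1,))

process(0, "/home")
try:
    process(1, "/pricing", fail_midway=True)
except RuntimeError:
    pass

print(conn.execute("SELECT page, n FROM counts").fetchall())        # [('/home', 1)]
print(conn.execute("SELECT next_offset FROM progress").fetchone())  # (1,)
```

Because the offset was not advanced, a retry will reprocess event 1 exactly once. Stream frameworks have to achieve the same guarantee across several systems, which is considerably harder.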

Idempotent Actions

An idempotent operation has the same effect whether it is performed once or several times, so retrying it after a failure causes no harm.

Example: Setting a user's status to "online" multiple times doesn't change the outcome.
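Setting a value is naturally idempotent; incrementing a counter is not. A common technique is to store the offset of the last applied event alongside the value and skip anything already seen. The sketch below is hypothetical and assumes a single writer per key and that replayed events arrive in the same order.

```python
class IdempotentCounter:
    """Counter that tolerates redelivered events by remembering the offset
    of the last event it applied."""

    def __init__(self):
        self.value = 0
        self.last_offset = -1

    def apply(self, offset, amount):
        if offset <= self.last_offset:
            return False  # already applied: a retry or duplicate delivery, so skip it
        self.value += amount
        self.last_offset = offset
        return True

status = {}

def set_status(user, value):
    # Naturally idempotent: applying it twice gives the same result as once.
    status[user] = value

set_status("ana", "online")
set_status("ana", "online")

counter = IdempotentCounter()
for offset, amount in [(0, 5), (1, 3), (1, 3), (2, 2)]:  # offset 1 is delivered twice
    counter.apply(offset, amount)

print(status)         # {'ana': 'online'}
print(counter.value)  # 10
```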

Recovering State After Failures

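Stream processors often maintain state (like counts or averages). After a crash, they need a way to recover this state, such as:

External Storage: Keeping the state in a remote database that is itself replicated, so it survives the loss of any single processor.

Replication: Keeping the state local to each processor for speed, while replicating its changes elsewhere so a replacement processor can restore it. Kafka Streams, for instance, writes state changes to a dedicated Kafka topic.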
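The local-state approach can be sketched in Python with a hypothetical `ChangelogBackedStore`. It follows the same idea as Kafka Streams (every state change is also written to a log, and that log can be compacted to keep only the latest value per key), but it does not reflect Kafka's actual API. A plain list stands in for the durable, replicated log.

```python
class ChangelogBackedStore:
    """Local key-value state whose every write is also appended to a changelog.

    A replacement processor rebuilds the state by replaying the changelog.
    """

    def __init__(self, changelog):
        self.changelog = changelog
        self.state = {}
        for key, value in changelog:  # restore: replay past changes in order
            self.state[key] = value

    def put(self, key, value):
        self.state[key] = value
        self.changelog.append((key, value))

def compact(changelog):
    """Log compaction: keep only the latest value for each key."""
    latest = {}
    for key, value in changelog:
        latest.pop(key, None)  # move the key to the position of its newest write
        latest[key] = value
    return list(latest.items())

durable_log = []
store = ChangelogBackedStore(durable_log)
for page in ["/home", "/docs", "/home"]:
    store.put(page, store.state.get(page, 0) + 1)

del store  # simulate losing the processor and its in-memory state

recovered = ChangelogBackedStore(compact(durable_log))
print(recovered.state)  # {'/docs': 1, '/home': 2}
```

Compaction keeps the changelog from growing without bound while still allowing the full current state to be restored.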

Conclusion

Stream processing is a powerful tool that enables real-time data handling, providing businesses with timely insights and responsiveness. By understanding its core concepts—like immutability, event patterns, time challenges, and fault tolerance—we can build robust systems that meet the demands of modern applications.

As technology continues to evolve, mastering stream processing will be essential for anyone looking to leverage the full potential of continuous data.

Copyright Notice

© 2024 trnquiltrips. All rights reserved.

This content is freely available for academic and research purposes only. Redistribution, modification, and use in any form for commercial purposes is strictly prohibited without explicit written permission from the copyright holder.

For inquiries regarding permissions, please contact trnquiltrips@gmail.com